FAQs on Big Data Analysis & Process Mining Projects

Wonhui Ko | Oct 14, 2018 | 6 min read

Intro

How are ‘data analysis’ and ‘big data analysis’ different? Our team often hears this question while working on projects with clients across many industries. We believe answering it will help many readers.

What is the difference between big data analysis and traditional data analysis?

Big data analysis involves creating new value by analyzing log data in conjunction with conventional data. First, we need to define ‘log data’ and ‘conventional data.’

· Conventional Data: Data stored in databases built to operate services and systems. Broadly, there are two types of conventional data: master data and transaction data. Master data holds relatively stable reference information, such as HR or system information. Transaction data is created, modified, or deleted in the database as business events happen. For instance, on an online shopping platform, the delivery status of a product and the total amount of an order are both transaction data.

· Log Data: The trace data left behind by a particular object. Originally, log data was recorded to find the root cause when a system malfunctioned. With the advent of the big data era, however, the purpose of log data changed dramatically: many logging programs were developed, and they began recording events over specific periods. Whether the data is structured or unstructured, and regardless of its file format or where it is stored, I consider any data that records events in order to trace a specific subject to be log data. A prime example is the web log file, which captures user behavior patterns by recording information such as cookies, timestamps, IP addresses, URLs, and referrers.
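To make the web log example concrete, here is a minimal sketch that turns one raw web log line into a structured record. It assumes the Apache "combined" log format (IP, timestamp, request, status, size, referrer, user agent); the sample line and the `parse_line` helper are hypothetical. The standard combined format does not include cookies, so sites that track them usually extend their log format.

```python
import re
from datetime import datetime
from typing import Optional

# Apache "combined" log format (assumed):
# host ident user [time] "method url protocol" status bytes "referrer" "user-agent"
COMBINED_LOG = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<url>\S+) [^"]*" (?P<status>\d{3}) (?P<size>\S+) '
    r'"(?P<referrer>[^"]*)" "(?P<agent>[^"]*)"'
)

def parse_line(line: str) -> Optional[dict]:
    """Turn one raw web log line into a structured record, or None if it doesn't match."""
    m = COMBINED_LOG.match(line)
    if m is None:
        return None
    record = m.groupdict()
    # Timestamps look like 14/Oct/2018:10:15:32 +0900 (English month names assumed)
    record["time"] = datetime.strptime(record["time"], "%d/%b/%Y:%H:%M:%S %z")
    record["status"] = int(record["status"])
    return record

# Hypothetical sample line
line = ('203.0.113.7 - - [14/Oct/2018:10:15:32 +0900] "GET /products/42 HTTP/1.1" '
        '200 5120 "https://shop.example.com/search?q=shoes" "Mozilla/5.0"')
record = parse_line(line)
print(record["ip"], record["url"], record["referrer"])
# 203.0.113.7 /products/42 https://shop.example.com/search?q=shoes
```

Once each line is a record, the "unstructured" log behaves like a table of events that can be joined with conventional data.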

Data analysis was being done long before big data. Familiar examples include sales trends, sales rates, and margin rates. Big data analysis, by contrast, occurs when log data is used on top of this to provide new value or information.
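As a small illustration of "conventional data plus log data", the sketch below combines hypothetical master data (customer segments), transaction data (orders), and log data (page views) to find customers who browsed but never bought. Sales figures alone cannot show this group, because it only appears in the log. All names and values here are made up for illustration.

```python
from collections import Counter

# Conventional data (hypothetical sample values)
customers = {"c1": "VIP", "c2": "Regular", "c3": "Regular"}  # master data: customer -> segment
orders = [("c1", 120.0), ("c2", 35.0)]                        # transaction data: (customer, amount)

# Log data (hypothetical): product-page views recorded in the web log
page_views = [("c1", "/products/1"), ("c2", "/products/3"),
              ("c3", "/products/2"), ("c3", "/products/2")]

def browsed_without_buying(customers, orders, page_views):
    """Count, per segment, customers who appear in the log but have no order."""
    buyers = {customer for customer, _ in orders}
    browsers = {customer for customer, _ in page_views}
    return Counter(customers[c] for c in browsers - buyers)

print(browsed_without_buying(customers, orders, page_views))  # Counter({'Regular': 1})
```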

What new value does big data analysis provide?

Traditional data analysis has already provided a great deal of value. It supports not only monitoring metrics such as sales trends and sales rates but also extracting insights with econometric models. A prime example is CRM, which yields insights such as customer segmentation, customer loyalty, and the management of departing customers. These insights made it possible to establish marketing strategies, make predictions, and build recommendation systems.

However, by bringing log data into such analysis, we can create even more value and new services. Using the behavior patterns found in log data, we can segment customers by how they actually behave. By tracing back the point at which a customer left, we can predict departure scenarios more accurately and offer more effective promotions. And the better we understand a customer’s patterns, the better the recommendations we can provide.
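Tracing back where customers left can be sketched as finding the last page of every session that did not end in a purchase. This minimal example assumes events of the form (session, timestamp, URL) and treats a hypothetical `/checkout/complete` page as the sign of a completed purchase; a real site would need its own definition of "completed".

```python
from collections import Counter, defaultdict

def exit_pages(events, completion_page="/checkout/complete"):
    """Count the last page of every session that did not end on completion_page."""
    sessions = defaultdict(list)
    for session_id, timestamp, url in events:
        sessions[session_id].append((timestamp, url))
    exits = Counter()
    for visits in sessions.values():
        _, last_url = max(visits, key=lambda visit: visit[0])  # latest event in the session
        if last_url != completion_page:
            exits[last_url] += 1
    return exits

# Hypothetical events: (session, seconds since session start, url); order may be mixed
events = [
    ("s1", 0, "/home"), ("s1", 40, "/products/1"), ("s1", 95, "/cart"),
    ("s2", 0, "/home"), ("s2", 30, "/cart"), ("s2", 70, "/checkout/complete"),
    ("s3", 50, "/cart"), ("s3", 0, "/products/2"),
    ("s4", 0, "/products/2"),
]
print(exit_pages(events))  # Counter({'/cart': 2, '/products/2': 1})
```

Here most departures happen at the cart, which is the kind of signal that could drive a targeted promotion.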

What value does process mining provide?

“In the end, it is the process that matters (and not the data or the software).

Not just patterns and decisions, but end-to-end processes.”

– W. Van der Aalst

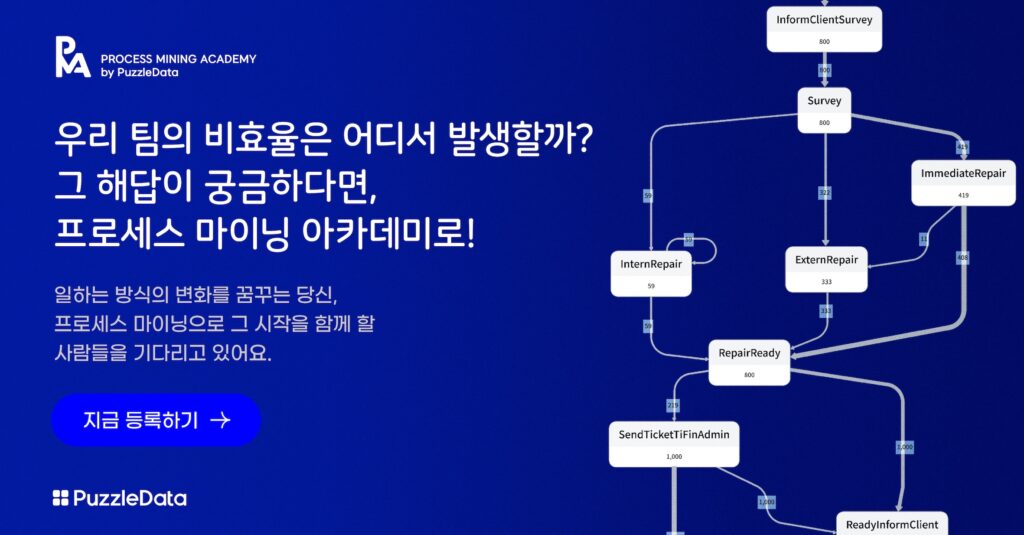

What most distinguishes process mining from traditional data analysis is its focus on the end-to-end process. Process mining is arguably the most powerful tool for analyzing log data, because it analyzes the traces recorded in logs from a process perspective.

Let me give you an example. Imagine a consumer using an online retail mall and a service provider who wants to understand the behavior of that consumer. The service provider records customer behavior through cookies, sessions, IPs, domains, devices used, URLs, referrers, and so on. This collected web log represents the traces left by customers using the service.

A consumer visits the site, searches for products, compares them, adds them to the cart, and then makes a purchase. Each of these steps is recorded as log data on the service provider’s server.

Through this data, process mining helps discover and understand the ‘online shopping behavior process’ of the consumer. It can vividly visualize the actual flow of how a purchasing customer behaved on the web.
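Many process discovery techniques start from the directly-follows relation: how often one activity is immediately followed by another within the same case. The sketch below counts that relation for hypothetical shopping cases. It is only a minimal illustration of the idea, not ProDiscovery’s algorithm, and real tools add filtering and layout on top of it.

```python
from collections import Counter, defaultdict

def directly_follows(events):
    """Count how often activity a is immediately followed by activity b within one case."""
    traces = defaultdict(list)
    for case_id, activity, timestamp in events:
        traces[case_id].append((timestamp, activity))
    dfg = Counter()
    for trace in traces.values():
        trace.sort(key=lambda event: event[0])  # order each case's events by time
        activities = [activity for _, activity in trace]
        dfg.update(zip(activities, activities[1:]))  # count consecutive pairs
    return dfg

# Hypothetical event log: (case, activity, timestamp)
events = [
    ("c1", "Search", 1), ("c1", "Compare", 2), ("c1", "Add to cart", 3), ("c1", "Purchase", 4),
    ("c2", "Search", 1), ("c2", "Add to cart", 2), ("c2", "Purchase", 3),
    ("c3", "Search", 1), ("c3", "Compare", 2),
]
for (a, b), n in directly_follows(events).most_common():
    print(f"{a} -> {b}: {n}")
```

Each counted pair becomes an arrow in a process map, with the count as its frequency.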

Are there any points of caution when collecting logs for process mining?

Process mining is a data science discipline that discovers and enhances process models from log data. It stands at the midpoint between process analysis and data mining. To define the Case ID and Activity properly and extract the correct log data, you first need a thorough understanding of which process you want to analyze.
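The sketch below shows how the choice of Case ID and Activity shapes the extracted log. It turns raw web log records into (case ID, activity, timestamp) events, the minimal attributes commonly required for process mining. The URL-to-activity rules and record fields are hypothetical; in practice they come from understanding the process you want to analyze.

```python
# Hypothetical rules mapping URL prefixes to the business activities we care about
ACTIVITY_RULES = [
    ("/search", "Search product"),
    ("/products/", "View product"),
    ("/cart", "View cart"),
    ("/checkout", "Check out"),
]

def to_event_log(records, case_key="session_id"):
    """Turn raw web-log records into (case_id, activity, timestamp) events."""
    log = []
    for rec in records:
        activity = next((name for prefix, name in ACTIVITY_RULES
                         if rec["url"].startswith(prefix)), None)
        if activity is None:
            continue  # e.g. images or stylesheets: not a step in the process being analyzed
        log.append((rec[case_key], activity, rec["time"]))
    log.sort(key=lambda event: (event[0], event[2]))  # group by case, then order by time
    return log

# Hypothetical records from two visits by the same customer
records = [
    {"session_id": "s1", "customer_id": "c1", "url": "/search?q=shoes", "time": 1},
    {"session_id": "s1", "customer_id": "c1", "url": "/static/logo.png", "time": 2},
    {"session_id": "s1", "customer_id": "c1", "url": "/products/42", "time": 3},
    {"session_id": "s2", "customer_id": "c1", "url": "/checkout", "time": 9},
]
print(to_event_log(records))
print(to_event_log(records, case_key="customer_id"))
```

Using the session as the Case ID analyzes each visit separately; using the customer ID instead follows one customer’s journey across visits. The same raw log yields different processes depending on that choice.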

To learn more about the minimum requirements for process mining, read this article:

– What are the Three Key Elements of Process Mining?

Understanding the nuts and bolts of process mining can be difficult for newcomers to the field. In such cases, we highly recommend consulting a process mining expert. The PuzzleData team is always open to your inquiries.

Redefine your big data analysis experience with ProDiscovery.

Developed by PuzzleData, ProDiscovery is a process intelligence platform that offers a variety of process analysis, process mining, and big-data analysis functions. Obtain new insights through log data and unearth your process potential.